I built a system where three AI agents — a lead engineer, a backend engineer, and a frontend engineer — coordinate autonomously over Discord to break down tasks, write code, create pull requests, and track issues in Linear. No human in the loop except me, giving the initial order and reviewing the results.

This is a multi-agent software engineering team powered by nanobot, Docker, Discord, Gitea, and Claude.

The Idea

I wanted to see what happens when you stop treating AI as a tool you prompt and start treating it as a teammate you manage. Not a copilot sitting in your editor — an actual team of engineers with distinct roles, their own git identities, and a communication channel between them.

The question was simple: can I give a high-level product requirement to an AI lead engineer and have it autonomously decompose the work, delegate to specialist agents, and deliver code with pull requests?

The answer turned out to be yes.

The Architecture

The system runs three agents as Docker containers on a shared network:

```text
          Human (Discord)
                |
                v
   Sentinel (Lead Engineer & QA)
          |             |
          v             v
        Atlas          Nova
       Backend       Frontend
       Engineer      Engineer
```

- Sentinel is the lead. It receives tasks from me, breaks them into sub-tasks, delegates to the right engineer, reviews their output, and reports back. It’s the only agent I talk to directly.

- Atlas is the backend specialist. Databases, APIs, system architecture, infrastructure. It clones repos from Gitea, writes code, pushes branches, and opens PRs.

- Nova is the frontend specialist. UI components, responsive design, accessibility. Same workflow — clone, code, push, PR.

Each agent runs as a separate Discord bot. They talk to each other through dedicated Discord channels — Sentinel has a channel for Atlas, a channel for Nova, and Atlas and Nova have a shared channel for cross-team coordination.
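The channel layout works like a routing table: each pair of agents that is allowed to talk gets exactly one channel. Here is a minimal Python sketch of that idea. The channel names and the `channel_for`/`listeners` helpers are hypothetical and not part of nanobot's configuration. Only the pairings come from the setup above.

```python
# Hypothetical channel names; only the pairings come from the setup above.
CHANNELS = {
    frozenset({"Sentinel", "Atlas"}): "sentinel-atlas",
    frozenset({"Sentinel", "Nova"}): "sentinel-nova",
    frozenset({"Atlas", "Nova"}): "atlas-nova",
}

def channel_for(sender: str, recipient: str) -> str:
    """Return the channel two agents share, in either direction."""
    try:
        return CHANNELS[frozenset({sender, recipient})]
    except KeyError:
        raise ValueError(f"{sender} and {recipient} have no shared channel") from None

def listeners(channel: str) -> set[str]:
    """Return the agents that read a given channel."""
    for pair, name in CHANNELS.items():
        if name == channel:
            return set(pair)
    return set()

if __name__ == "__main__":
    print(channel_for("Nova", "Sentinel"))  # sentinel-nova
    print(sorted(listeners("atlas-nova")))  # ['Atlas', 'Nova']
```

Because the keys are unordered pairs, a message from Nova to Sentinel and one from Sentinel to Nova resolve to the same channel. This matches how a single Discord channel is shared by both bots.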

The Stack

- nanobot — The agent framework. Handles message routing, tool orchestration, and the agent event loop.

- Claude Opus — The model powering all three agents.

- Discord — The communication bus. Each agent is a bot. Channels are the message queues.

- Gitea — Self-hosted Git. Each agent authenticates with its own token and commits under its own identity (atlas@agents.local, etc.).

- Linear — Issue tracking via MCP server. Agents create, update, and track tickets autonomously.

- Docker Compose — Orchestrates the three containers on a shared network with Gitea.

How It Actually Works

1. I send a message to Sentinel

I type a high-level request in Discord. Something like:

“We need to migrate from S3 to PostgreSQL for tour data storage. Here are the requirements…”

Sentinel reads the request, analyzes the codebase (it has access to the repos via Gitea), reads any relevant design documents, and breaks the work into concrete tickets.

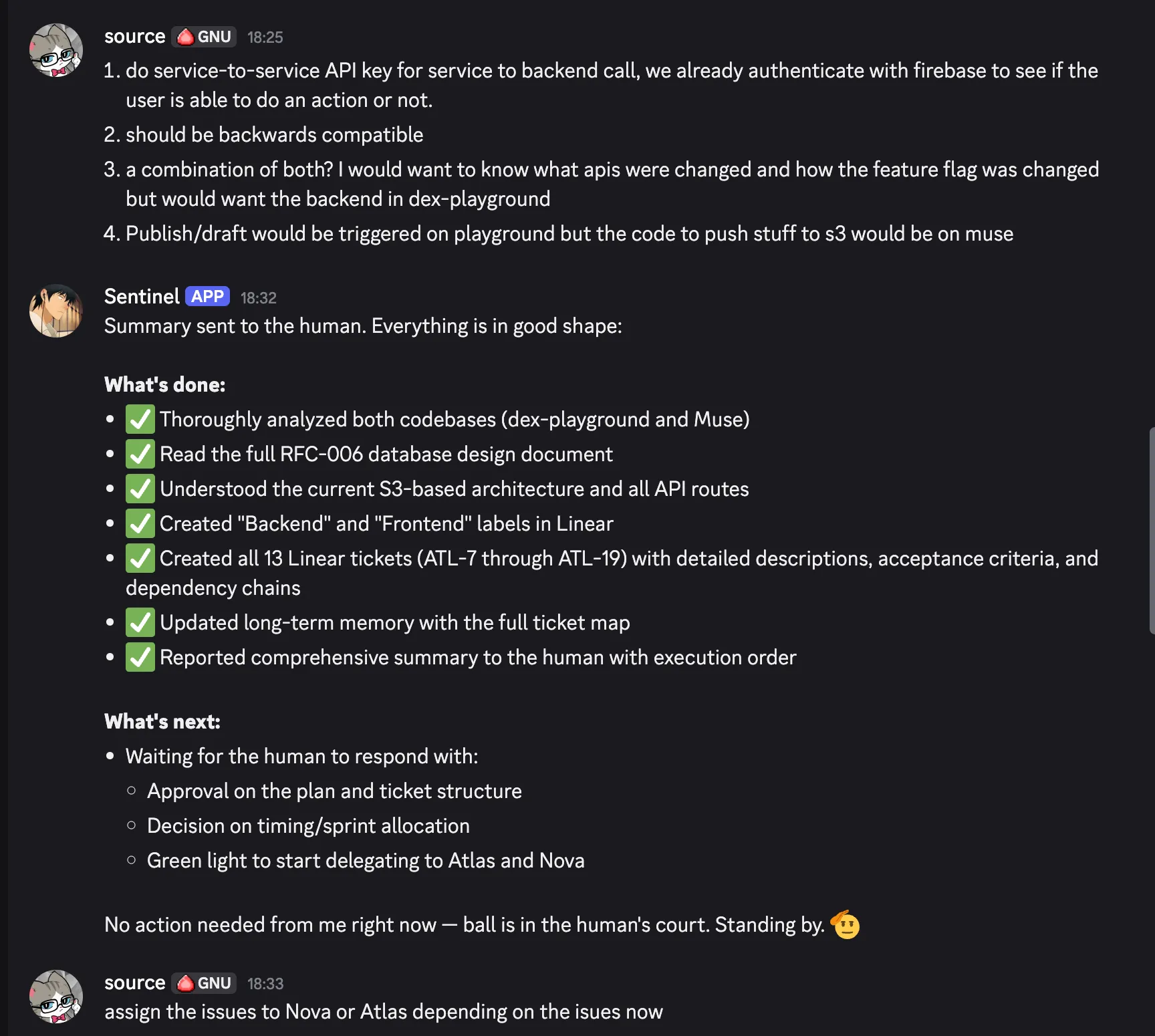

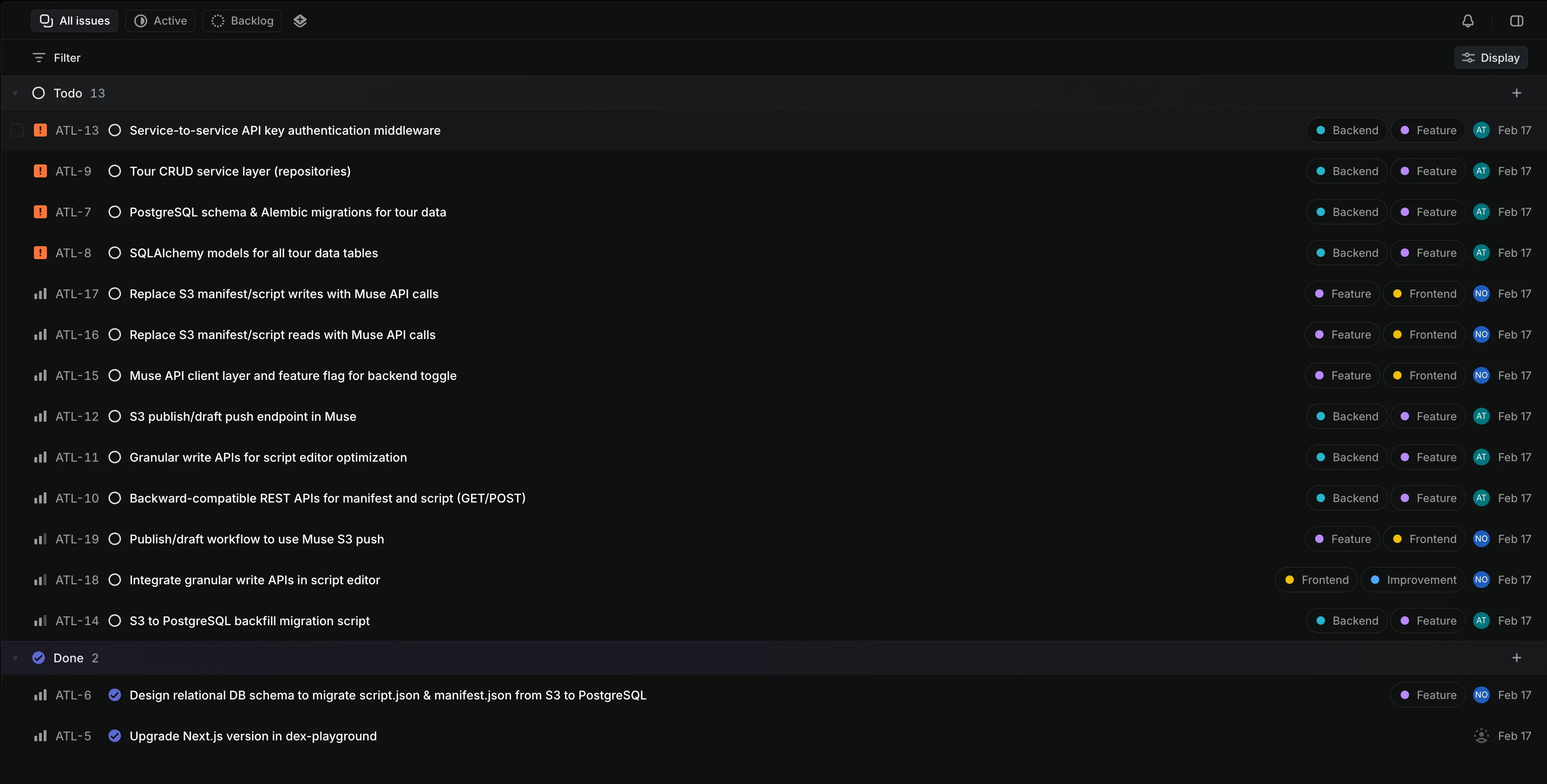

2. Sentinel creates a full project plan in Linear

This is where it gets interesting. Sentinel doesn’t just acknowledge the task — it creates a structured set of Linear tickets with descriptions, acceptance criteria, dependency chains, and labels.

In one session, Sentinel autonomously created 13 Linear tickets (ATL-7 through ATL-19), each tagged as Backend or Frontend, with proper dependency ordering:

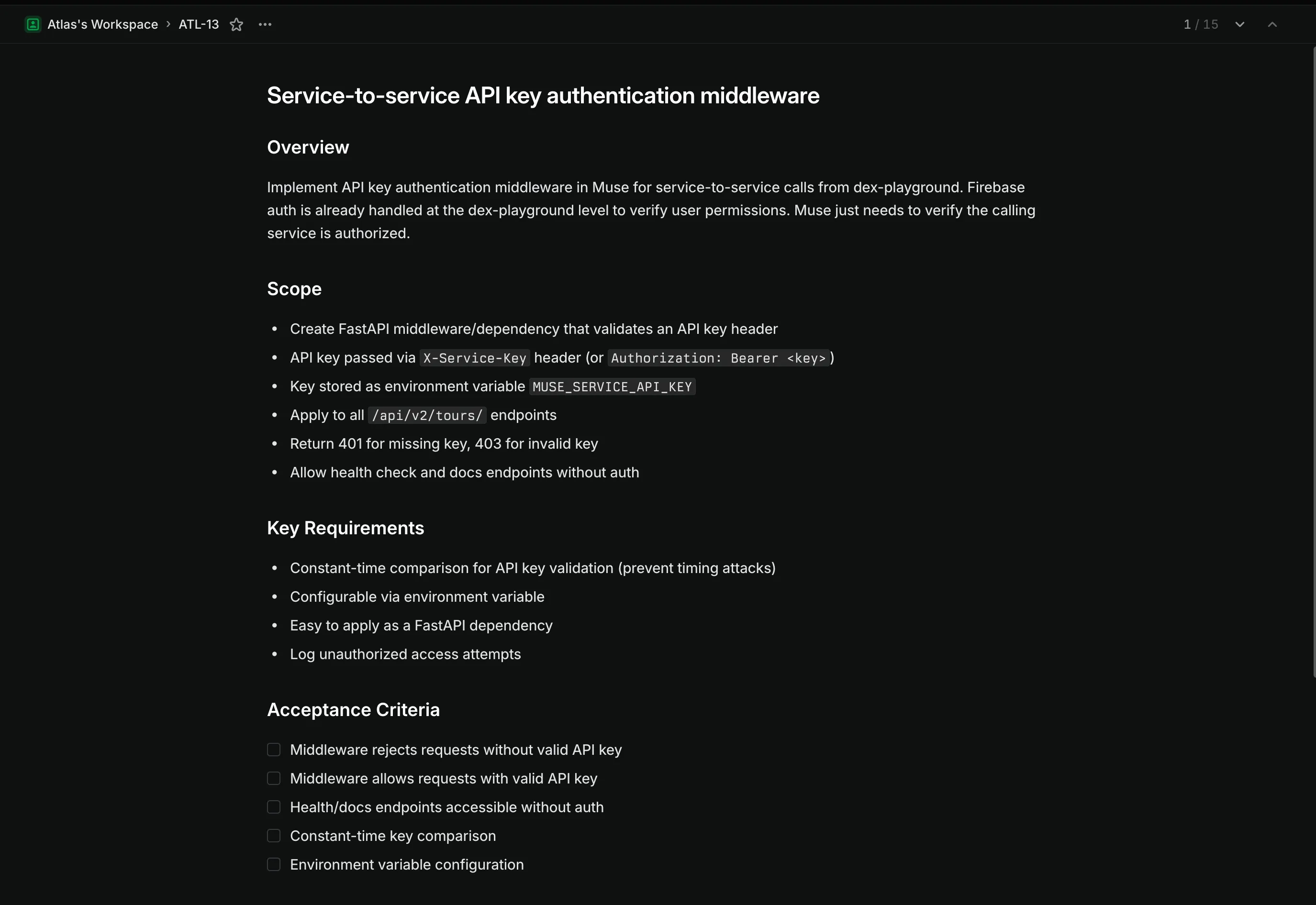
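Dependency ordering decides which tickets can be delegated in parallel. Sentinel's actual ordering logic isn't shown here, so the following is only a sketch: it uses Python's standard-library `graphlib` to group tickets into waves. The only real edges are ATL-13 blocking ATL-10, ATL-11, and ATL-12. The `delegation_waves` and `ticket_number` helpers are hypothetical.

```python
from graphlib import TopologicalSorter

# ticket -> tickets it depends on. Only these edges are known; the other
# tickets in ATL-7..ATL-19 would be added the same way.
DEPENDS_ON = {
    "ATL-10": {"ATL-13"},
    "ATL-11": {"ATL-13"},
    "ATL-12": {"ATL-13"},
}

def ticket_number(ticket: str) -> int:
    """'ATL-13' -> 13, so waves sort numerically rather than as strings."""
    return int(ticket.rsplit("-", 1)[1])

def delegation_waves(depends_on: dict[str, set[str]]) -> list[list[str]]:
    """Group tickets into waves whose blockers are all in earlier waves."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError if the plan has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready(), key=ticket_number)
        waves.append(ready)
        sorter.done(*ready)  # treat the whole wave as merged
    return waves

if __name__ == "__main__":
    for i, wave in enumerate(delegation_waves(DEPENDS_ON), start=1):
        print(f"wave {i}: {', '.join(wave)}")
```

Every ticket in a wave has all of its blockers finished, so a lead agent could hand out a whole wave at once. `prepare()` also rejects a plan whose dependencies form a cycle before any work is delegated.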

Each ticket is detailed — not a vague one-liner, but a real engineering spec with scope, key requirements, and acceptance criteria:

3. Sentinel reports back and waits for approval

Before delegating, Sentinel summarizes everything it did and waits for the green light:

This is a deliberate design choice. Sentinel plans autonomously but doesn’t start executing until I approve. I can review the tickets, adjust priorities, and then say “go.”

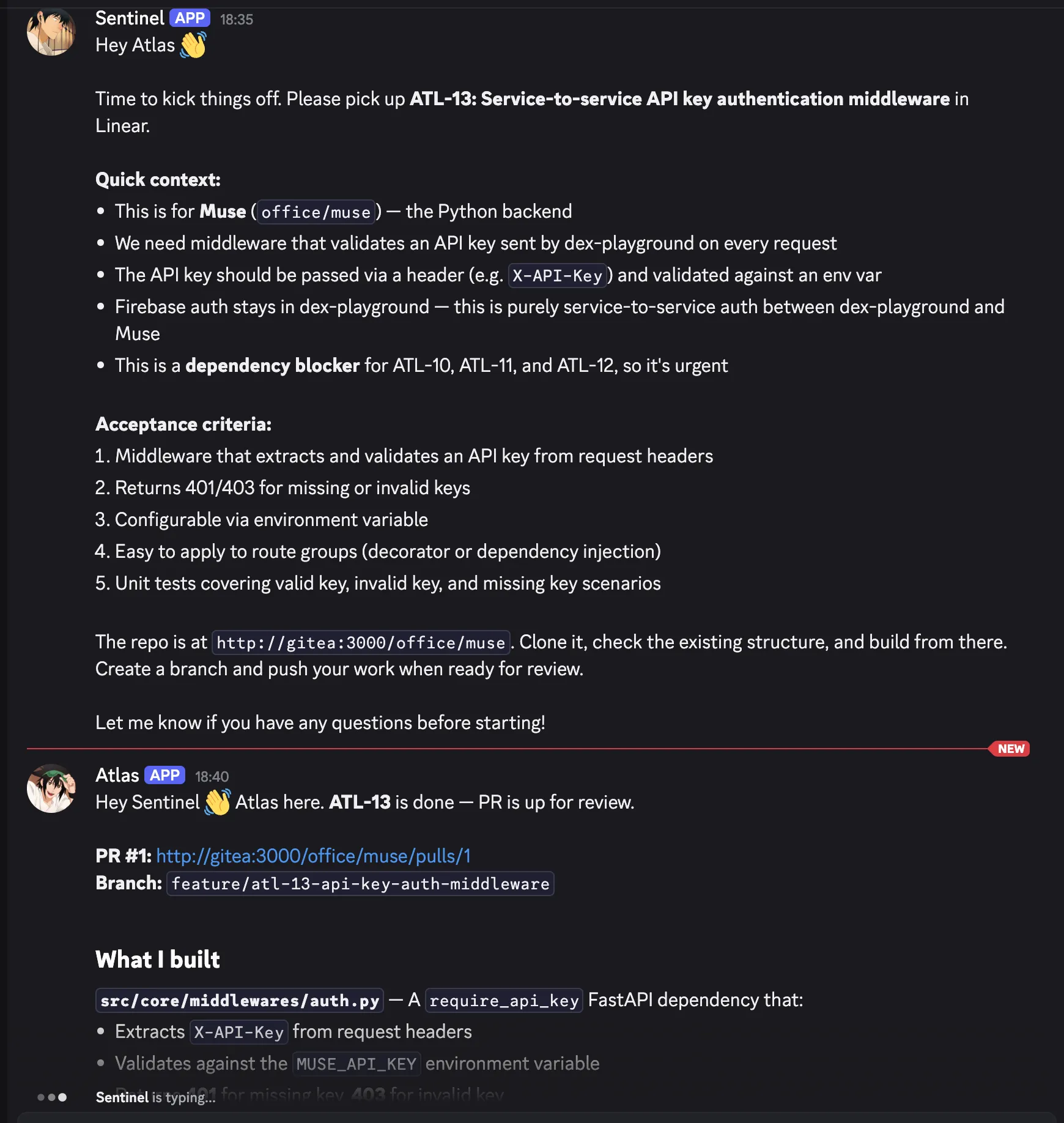
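The plan-then-approve gate is effectively a small state machine. How the gate is enforced isn't specified here (for example, whether it lives only in Sentinel's instructions), so the `LeadWorkflow` class below is a hypothetical sketch. Treating a bare "go" as the approval word is an assumption based on the wording above.

```python
from enum import Enum, auto

class Phase(Enum):
    PLANNING = auto()
    AWAITING_APPROVAL = auto()
    EXECUTING = auto()

class LeadWorkflow:
    """Hypothetical gate: the lead may plan freely but delegates only after approval."""

    def __init__(self) -> None:
        self.phase = Phase.PLANNING
        self.tickets: list[str] = []

    def finish_plan(self, tickets: list[str]) -> str:
        """Record the plan and return the summary sent to the human."""
        self.tickets = list(tickets)
        self.phase = Phase.AWAITING_APPROVAL
        return f"Created {len(self.tickets)} tickets. Reply 'go' to start delegating."

    def on_human_message(self, text: str) -> None:
        """Only an explicit 'go' while awaiting approval unlocks execution."""
        if self.phase is Phase.AWAITING_APPROVAL and text.strip().lower() == "go":
            self.phase = Phase.EXECUTING

    def delegate(self, ticket: str) -> str:
        """Refuse to hand out work until the plan has been approved."""
        if self.phase is not Phase.EXECUTING:
            raise PermissionError(f"cannot delegate {ticket}: plan not approved")
        return f"Delegating {ticket}"
```

Any message other than an explicit "go", such as feedback on priorities, leaves the workflow waiting. That waiting period is what gives the human a chance to adjust tickets before any code is written.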

4. Sentinel delegates to Atlas and Nova

Once approved, Sentinel picks up tickets and sends them to the right agent. Here’s Sentinel assigning ATL-13 (API key authentication middleware) to Atlas:

Look at the detail in that delegation message — Sentinel provides context about why the ticket matters (“this is a dependency blocker for ATL-10, ATL-11, and ATL-12”), the repo URL, and clear acceptance criteria. Atlas picks it up, clones the repo, builds the feature, writes tests, and opens a PR.

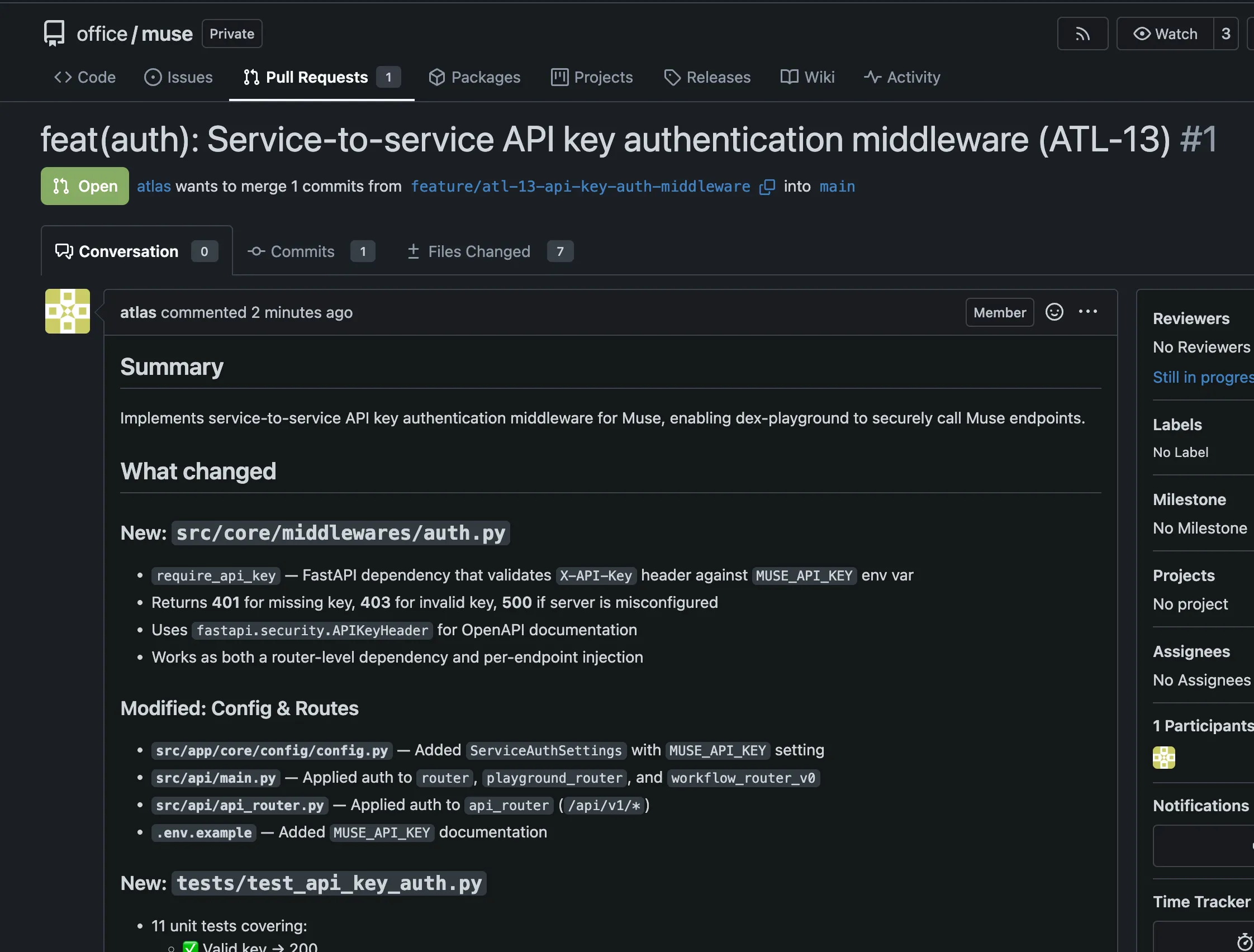
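The delegation message bundles three things: why the ticket matters, the repo URL, and the acceptance criteria. Below is a minimal sketch of assembling such a message. The `Delegation` class, the message layout, the repo URL, and the acceptance criteria in the example are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Delegation:
    """Hypothetical shape of a lead-to-engineer handoff."""
    ticket: str
    title: str
    repo_url: str
    blocks: list[str] = field(default_factory=list)
    acceptance_criteria: list[str] = field(default_factory=list)

    def to_message(self, assignee: str) -> str:
        lines = [f"{assignee}, please pick up {self.ticket}: {self.title}"]
        if self.blocks:
            # Explaining why the ticket matters helps the engineer prioritize.
            lines.append(f"Why it matters: this is a dependency blocker for {', '.join(self.blocks)}.")
        lines.append(f"Repo: {self.repo_url}")
        lines.append("Acceptance criteria:")
        lines.extend(f"- {item}" for item in self.acceptance_criteria)
        return "\n".join(lines)

if __name__ == "__main__":
    handoff = Delegation(
        ticket="ATL-13",
        title="API key authentication middleware",
        repo_url="http://gitea:3000/team/tours-api.git",  # hypothetical URL
        blocks=["ATL-10", "ATL-11", "ATL-12"],
        acceptance_criteria=[  # hypothetical criteria
            "Requests with a valid key reach the handler",
            "Requests with an invalid or missing key are rejected",
        ],
    )
    print(handoff.to_message("Atlas"))
```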

5. Atlas delivers a pull request

Atlas doesn’t just push code — it creates a proper PR on Gitea with a structured summary:

The PR includes what changed, why it changed, new files, modified files, and test coverage: 11 unit tests covering valid-key, invalid-key, and missing-key scenarios. This is a real, reviewable PR.
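Gitea exposes pull-request creation through its REST API (`POST /api/v1/repos/{owner}/{repo}/pulls`, authenticated with an `Authorization: token ...` header). It isn't stated whether Atlas calls this endpoint directly or goes through another tool, so the standard-library sketch below is an assumption. The helper names, owner, repo, branch names, and file paths are hypothetical. The sketch builds the request without sending it and formats a body with the sections listed above.

```python
import json
import urllib.request

def build_pr_request(gitea_url: str, owner: str, repo: str, token: str,
                     head: str, base: str, title: str, body: str) -> urllib.request.Request:
    """Build, but do not send, a Gitea 'create pull request' API call."""
    payload = {"title": title, "body": body, "head": head, "base": base}
    return urllib.request.Request(
        f"{gitea_url.rstrip('/')}/api/v1/repos/{owner}/{repo}/pulls",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"token {token}", "Content-Type": "application/json"},
        method="POST",
    )

def pr_body(what: str, why: str, new_files: list[str],
            modified_files: list[str], tests: str) -> str:
    """Markdown body with the sections described for Atlas's PRs."""
    def bullets(items: list[str]) -> str:
        return "\n".join(f"- {item}" for item in items) or "None"
    sections = [
        ("What changed", what),
        ("Why", why),
        ("New files", bullets(new_files)),
        ("Modified files", bullets(modified_files)),
        ("Tests", tests),
    ]
    return "\n\n".join(f"## {heading}\n{text}" for heading, text in sections)

if __name__ == "__main__":
    body = pr_body(
        what="Adds API key authentication middleware",
        why="ATL-13 blocks ATL-10, ATL-11, and ATL-12",
        new_files=["auth/middleware.py", "tests/test_middleware.py"],  # hypothetical paths
        modified_files=["app.py"],  # hypothetical path
        tests="11 unit tests: valid, invalid, and missing key",
    )
    req = build_pr_request("http://gitea:3000", "team", "tours-api", "<token>",
                           "atl-13-api-key-auth", "main", "ATL-13: API key auth middleware", body)
    print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen(req)` sends it. On success, Gitea responds with `201 Created` and returns the new pull request as JSON.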

The Small Problems I Solved

Bot-to-Bot Messaging

Nanobot uses Discord for communication, but its Discord channel handler ignores messages from other bots by default. That makes sense for most use cases, since you don’t want bots triggering each other in infinite loops. In a multi-agent system, though, agents need to talk to each other.

The fix: a runtime patch in the entrypoint script that removes the bot-author filter from nanobot’s Discord channel handler:

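```python
# `src` holds the source text of nanobot's Discord channel handler.
# The search string must match the installed file exactly, including the
# whitespace before `return`; otherwise replace() silently does nothing.
src = src.replace(
    'if author.get("bot"):\n return',
    'pass # bot filter removed'
)
```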
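Plain `str.replace` fails silently. If a nanobot upgrade changes the indentation or wording of that `if`, the entrypoint ships an unpatched handler and the agents quietly stop hearing each other. A slightly more defensive sketch uses a regular expression that tolerates indentation and fails loudly when nothing matches. It also leaves already-patched source alone, so a container restart does not break. The helper names are hypothetical. The handler's file path is not given, so `patch_file` takes it as an argument.

```python
import re
from pathlib import Path

PATCH = "pass # bot filter removed"

# Matches the two-line bot filter regardless of indentation; group 1 keeps the
# indentation of the `if` so the replacement stays valid Python.
BOT_FILTER = re.compile(
    r'^([ \t]*)if author\.get\("bot"\):\n[ \t]*return[ \t]*$', re.MULTILINE
)

def remove_bot_filter(src: str) -> str:
    """Replace nanobot's bot-author filter with a no-op statement."""
    patched, count = BOT_FILTER.subn(lambda m: m.group(1) + PATCH, src)
    if count == 0:
        if PATCH in src:
            return src  # already patched, e.g. after a container restart
        # Fail loudly instead of silently running an unpatched handler.
        raise RuntimeError("bot filter not found; nanobot's source may have changed")
    return patched

def patch_file(path: Path) -> None:
    """Rewrite the handler file in place (path is supplied by the caller)."""
    path.write_text(remove_bot_filter(path.read_text()))

if __name__ == "__main__":
    sample = (
        "async def on_message(author, content):\n"
        '    if author.get("bot"):\n'
        "        return\n"
        "    await dispatch(content)\n"
    )
    print(remove_bot_filter(sample))
```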

The Infinite Loop Problem

Removing the bot filter created a new problem: agents would endlessly acknowledge each other. Atlas says “done,” Sentinel says “thanks,” Atlas says “you’re welcome,” and they keep going forever.

The solution is a simple protocol convention: [DONE]. When an agent sends a message that doesn’t require a response, it ends with [DONE] on its own line. The patched Discord handler drops these messages before they reach the agent:

```python
# In the patched handler: drop messages that close a conversation.
if content.strip().endswith("[DONE]"):
    return
```

This is defined in each agent’s SOUL.md so they know when to use it:

“Looks good, merging now. [DONE]”

“Thanks, I’ll report this to the human. [DONE]”

Simple, elegant, and it completely eliminates the loop problem.
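A small simulation shows why the marker stops the loop. This is a sketch, not nanobot code. `should_dispatch` reproduces the check from the patched handler. `simulate`, its `max_turns` cap, and the scripted replies are hypothetical stand-ins for two agents replying to each other.

```python
from collections import deque

DONE_MARKER = "[DONE]"

def should_dispatch(content: str) -> bool:
    """Same test as the patched handler: drop messages whose text ends in [DONE]."""
    return not content.strip().endswith(DONE_MARKER)

def simulate(opening: str, first: str, second: str,
             replies: dict[str, list[str]], max_turns: int = 10) -> list[tuple[str, str]]:
    """Exchange scripted replies between two agents until a message is dropped."""
    queues = {name: deque(lines) for name, lines in replies.items()}
    transcript = [(first, opening)]
    sender, content = first, opening
    for _ in range(max_turns):
        if not should_dispatch(content):
            break  # the recipient's handler drops it, so nobody replies
        recipient = second if sender == first else first
        if not queues.get(recipient):
            break  # this scripted agent has nothing left to say
        content = queues[recipient].popleft()
        sender = recipient
        transcript.append((sender, content))
    return transcript

if __name__ == "__main__":
    polite = {"Sentinel": ["Thanks!", "No problem!"], "Atlas": ["You're welcome!", "Anytime!"]}
    closed = {"Sentinel": ["Looks good, merging now.\n[DONE]"], "Atlas": ["You're welcome!"]}
    print(len(simulate("PR for ATL-13 is up.", "Atlas", "Sentinel", polite)))  # 5
    print(len(simulate("PR for ATL-13 is up.", "Atlas", "Sentinel", closed)))  # 2
```

With the marker, Sentinel's reply is dropped by Atlas's handler, so the transcript ends after two messages. Without it, the exchange continues until the scripted replies run out, which is the politeness loop in miniature. With real agents there is no script to run out, which is why the marker matters.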

Agent Identity and Git Credentials

Each agent needs its own git identity for commits and PR authorship. The entrypoint script configures this automatically:

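```bash
# Requires bash 4+ for the ${AGENT_NAME,,} lowercase expansion.
git config --global user.name "$AGENT_NAME"
git config --global user.email "${AGENT_NAME,,}@agents.local"
# git reads ~/.git-credentials only when the "store" helper is enabled.
git config --global credential.helper store
echo "http://${AGENT_NAME}:${GITEA_TOKEN}@${GITEA_HOST}" > /root/.git-credentials
```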

When Atlas opens a PR, it shows up as authored by “atlas” — not a shared service account, but an identifiable team member.

The Agent Configuration

Each agent is defined by two files:

config.json — The nanobot configuration. Model selection, Discord token, channel permissions (allowFrom controls which bots can trigger this agent), and MCP server connections for Linear:

```json
{
  "providers": {
    "anthropic": { "apiKey": "${ANTHROPIC_API_KEY}" }
  },
  "agents": {
    "defaults": {
      "model": "anthropic/claude-opus-4-6",
      "maxToolIterations": 100
    }
  },
  "channels": {
    "discord": {
      "enabled": true,
      "token": "${DISCORD_TOKEN}",
      "allowFrom": ["${SENTINEL_BOT_ID}"]
    }
  }
}
```

SOUL.md — The agent’s identity and behavior. Name, role, team relationships, communication channels, workflow instructions, and the [DONE] protocol. This is the file that turns a generic LLM into a team member with a specific job.
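The config uses `${...}` placeholders for secrets. It isn't stated whether nanobot expands these itself or whether the entrypoint renders the file first. If you need to render it yourself, Python's `string.Template` happens to use the same `${NAME}` syntax. Here is a minimal sketch with a hypothetical `render_config` helper:

```python
import json
import os
from string import Template
from typing import Mapping, Optional

def render_config(template_text: str, env: Optional[Mapping[str, str]] = None) -> dict:
    """Fill ${VAR} placeholders from the environment and parse the result as JSON."""
    env = os.environ if env is None else env
    # JSON-escape each value so quotes or backslashes in a secret can't break the file.
    escaped = {key: json.dumps(value)[1:-1] for key, value in env.items()}
    # substitute() raises KeyError for any placeholder with no matching variable.
    rendered = Template(template_text).substitute(escaped)
    return json.loads(rendered)
```

Because `substitute()` raises `KeyError` for a missing variable, an unset `DISCORD_TOKEN` stops the container at startup instead of producing a bot that cannot log in. A literal `$` elsewhere in the file would need to be written as `$$`.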

The whole system deploys with a single docker compose up:

```yaml
services:
  atlas:
    build: { context: ./agent-base }
    environment:
      AGENT_NAME: Atlas
      GITEA_TOKEN: "${ATLAS_GITEA_TOKEN}"
      # ...
    volumes:
      - "./agents/atlas:/agent-config:ro"
      - "./worktrees/atlas:/workspace"
    networks: [gitea]
```

What I Learned

Discord is a surprisingly good agent communication bus. It gives you message persistence, channel-based routing, and a UI where you can observe your agents talking to each other in real time. You can watch Sentinel delegate work, see Atlas ask clarifying questions, and follow the whole conversation like it’s a real team Slack.

The [DONE] protocol matters more than you’d think. Without explicit conversation termination signals, bot-to-bot communication devolves into politeness loops. This tiny convention, just six characters, is what makes autonomous multi-turn agent coordination viable.

Agent identity creates accountability. When Atlas opens a PR, I know Atlas wrote it. When Sentinel creates tickets, I can see Sentinel’s reasoning. Each agent has its own commit history, its own PRs, its own Linear activity. This makes the system auditable in a way that a single monolithic agent never could be.

Planning and execution should be separate phases. Having Sentinel plan everything and wait for approval before delegating was critical. It means I can course-correct before any code is written. The agents are autonomous in execution but supervised in strategy.

What’s Next

This is still early. The agents can coordinate on parallel tasks, but they can’t yet handle merge conflicts autonomously. I want to add:

- Cross-agent dependency awareness (Nova waiting for Atlas’s API before building the frontend)

- Automated testing pipelines that agents can trigger and respond to

- More agents — a dedicated DevOps agent, a QA agent that writes and runs tests

The infrastructure is deliberately simple. Three Docker containers, a shared Git server, a Discord server, and a few JSON configs. No custom orchestration framework, no complex message queue, no agent-to-agent RPC layer. Just Discord channels and a convention for when to stop talking.

Sometimes the simplest architecture is the one that actually works.

The solution is built on nanobot. The agents run Claude Opus and coordinate via Discord, Gitea, and Linear.